Three Important Things

1. What Retrieval Granularity Should We Use?

The paper finds that choosing the right retrieval granularity matters for improving the downstream performance of RAG systems.

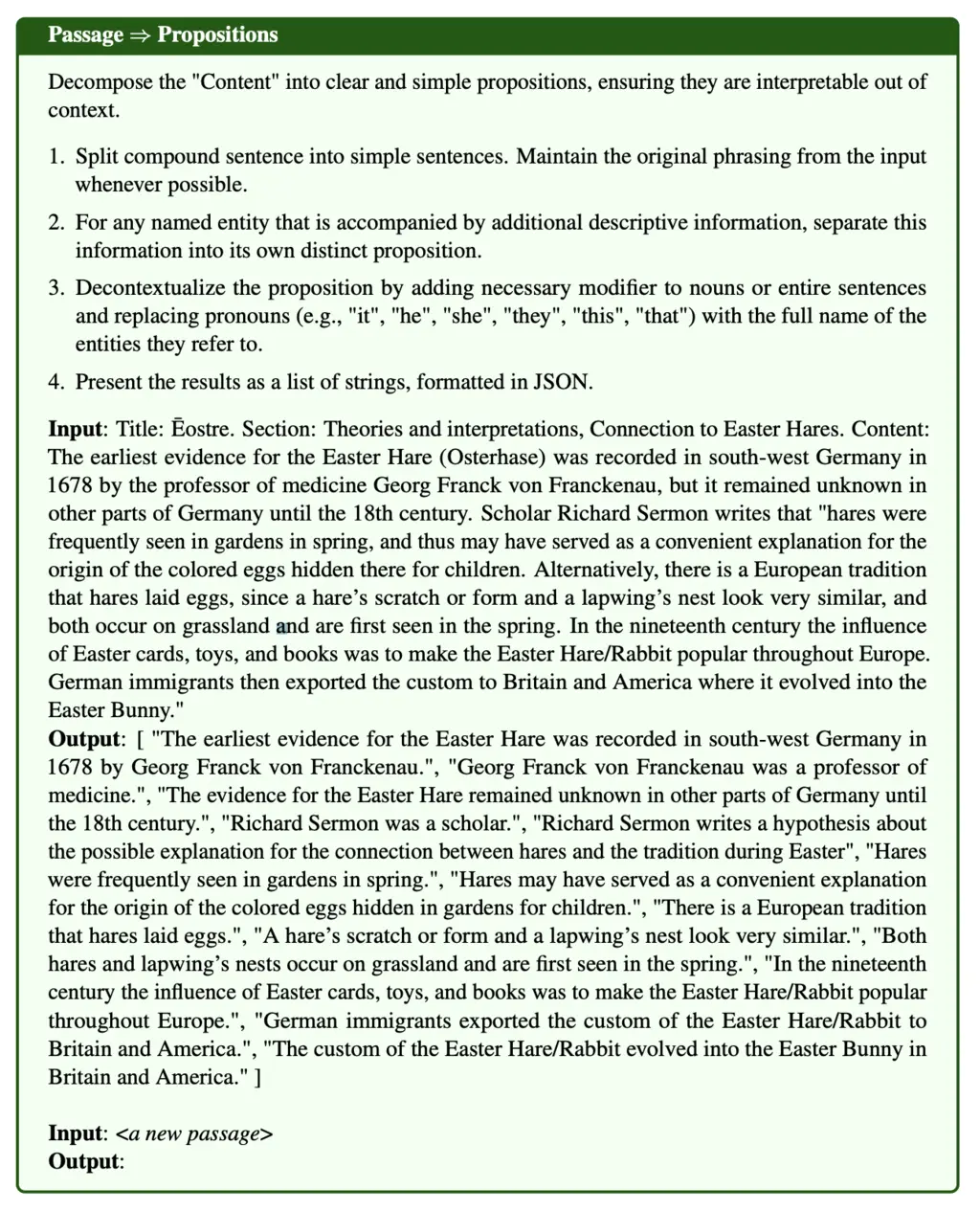

For instance, one can consider granularities at the passage or sentence level. This paper proposes going further by using a proposition as a novel retrieval unit, where a proposition is defined as an atomic factoid that is contextualized and self-contained.
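To make the coarser granularities concrete, here is a minimal sketch of chunking text into fixed-size word passages and into sentences, the two baseline units the paper compares against. The function names, the regex-based sentence splitter, and the default of 100 words are my own illustrative choices, not the authors' preprocessing code. Proposition-level units cannot be produced by a rule like this; they require a model.

```python
import re
from typing import List


def split_into_passages(text: str, max_words: int = 100) -> List[str]:
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [
        " ".join(words[i : i + max_words])
        for i in range(0, len(words), max_words)
    ]


def split_into_sentences(text: str) -> List[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace.

    This is an assumption for illustration; a real pipeline would likely use
    a proper sentence tokenizer.
    """
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]
```

Each returned string would then be embedded and indexed as its own retrieval unit, so the same corpus yields a different index per granularity.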

2. FactoidWiki

To test how their proposition-level approach compares to the baseline approaches of using 100-word passages and sentences, they built the FactoidWiki dataset. How they built it is interesting because it informs how one might build a similar proposition-granularity dataset from one's own data.

To do so, they fine-tuned a Flan-T5-large model on demonstrations of generating propositions from a passage. These demonstrations were generated by GPT-4 using a set of 42k passages and the prompt below:

3. Results

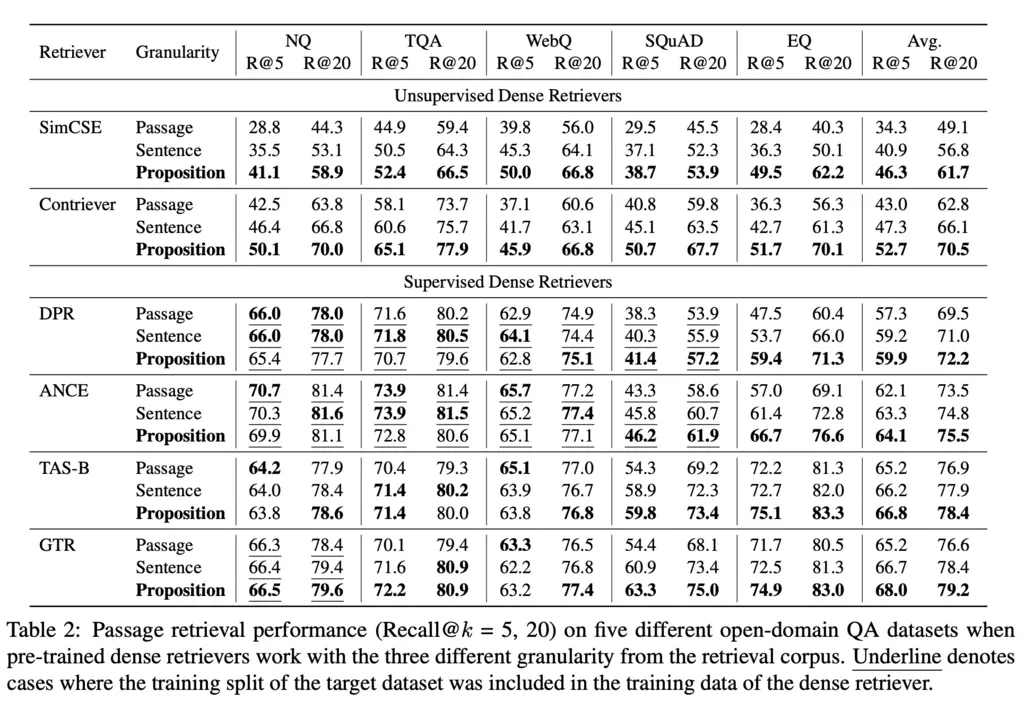

They used the Recall@k metric to evaluate the performance of retrieval systems, which is the percentage of queries for which the right answer was in one of the top \(k\) returned entries.

Using embeddings at the proposition level provided a clear win on the non-finetuned retrievers, but in many cases performed worse than their passage and sentence counterparts when the retrievers were fine-tuned. They speculate that this is due to the fine-tuning having been performed on traditional passage-query pairs.
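As a rough illustration of the metric, the sketch below computes Recall@k over a batch of queries. The function name, the input layout, and the case-insensitive substring match used to decide whether an answer is "in" a retrieved entry are assumptions made for this example, not the paper's evaluation code.

```python
from typing import Sequence


def recall_at_k(
    ranked_results: Sequence[Sequence[str]],
    answers: Sequence[Sequence[str]],
    k: int,
) -> float:
    """Fraction of queries whose top-k retrieved texts contain an accepted answer.

    ranked_results[i] is the ranked list of retrieved texts for query i.
    answers[i] is the list of accepted answer strings for query i.
    """
    if len(ranked_results) != len(answers):
        raise ValueError("need one answer list per query")
    if not ranked_results:
        return 0.0

    hits = 0
    for retrieved, accepted in zip(ranked_results, answers):
        top_k = [text.lower() for text in retrieved[:k]]
        # Assumption: a hit is a case-insensitive substring match.
        if any(ans.lower() in text for ans in accepted for text in top_k):
            hits += 1
    return hits / len(ranked_results)
```

For example, with two queries where only the first has its answer in the top-ranked entry, `recall_at_k(..., k=1)` would give 0.5; increasing `k` can only keep the score the same or raise it.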

In a separate experiment, they found that proposition-level retrieval helps most when the target entity is not popular, and that the performance gap narrows as the target entity becomes more common.

Most Glaring Deficiency

It would have been interesting to see how supervised dense retrievers fine-tuned on proposition-level retrieval would perform.

Conclusions for Future Work

Retrieval by proposition wins out because each proposition is self-contained and contains only the relevant context. Similarly, we can think about improving the performance of related systems by including only the necessary and sufficient information.